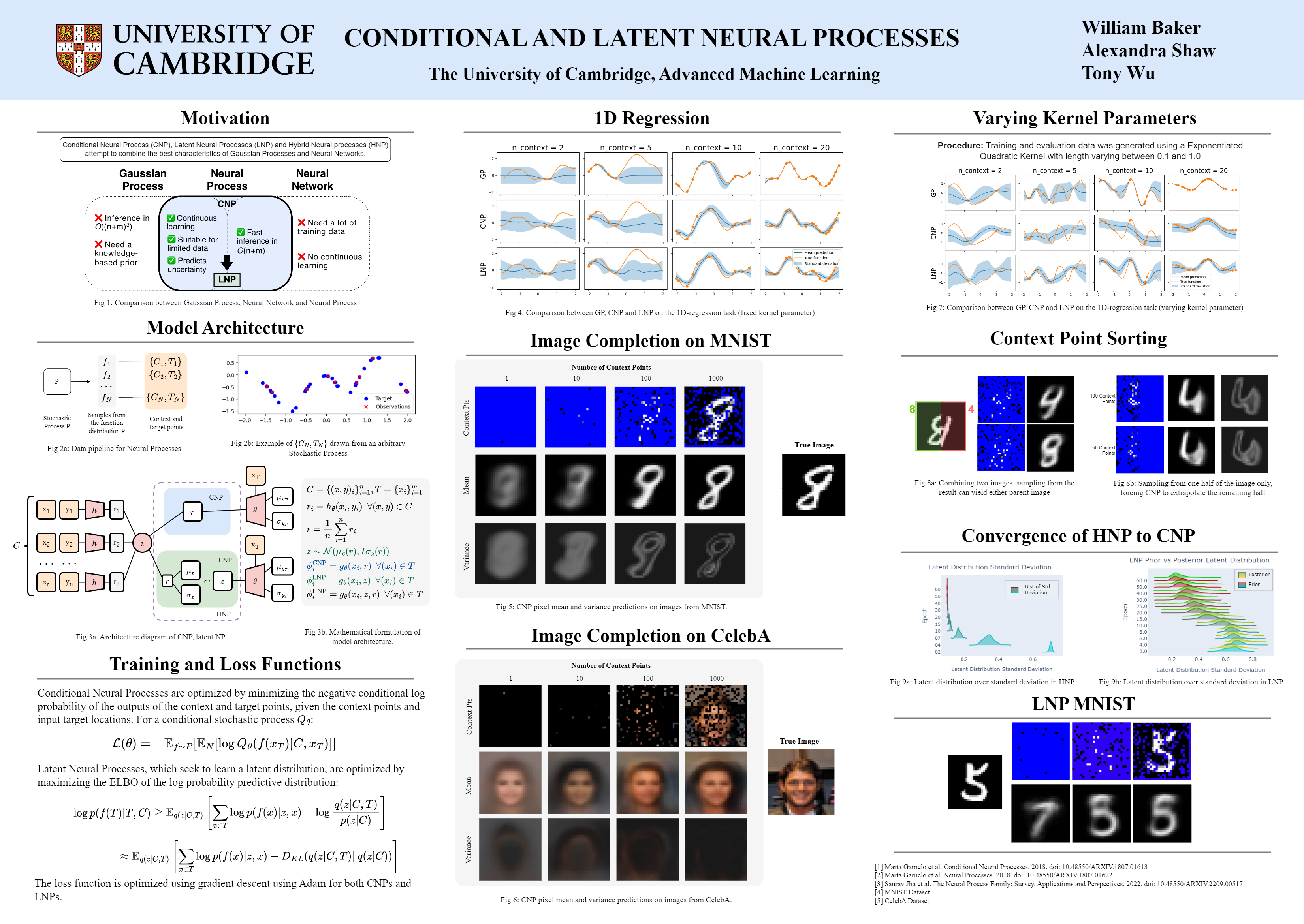

During my studies at Cambridge, I implemented several Neural Process architectures in TensorFlow as part of an Advanced Machine Learning group project with Tony Wu and Alexandra Shaw.

Neural Processes are the deep learning counterpart of Gaussian Processes. Like Gaussian Processes, they represent uncertainty over their predictions, but they scale to large datasets and make predictions quickly, at the cost of being much harder to train.

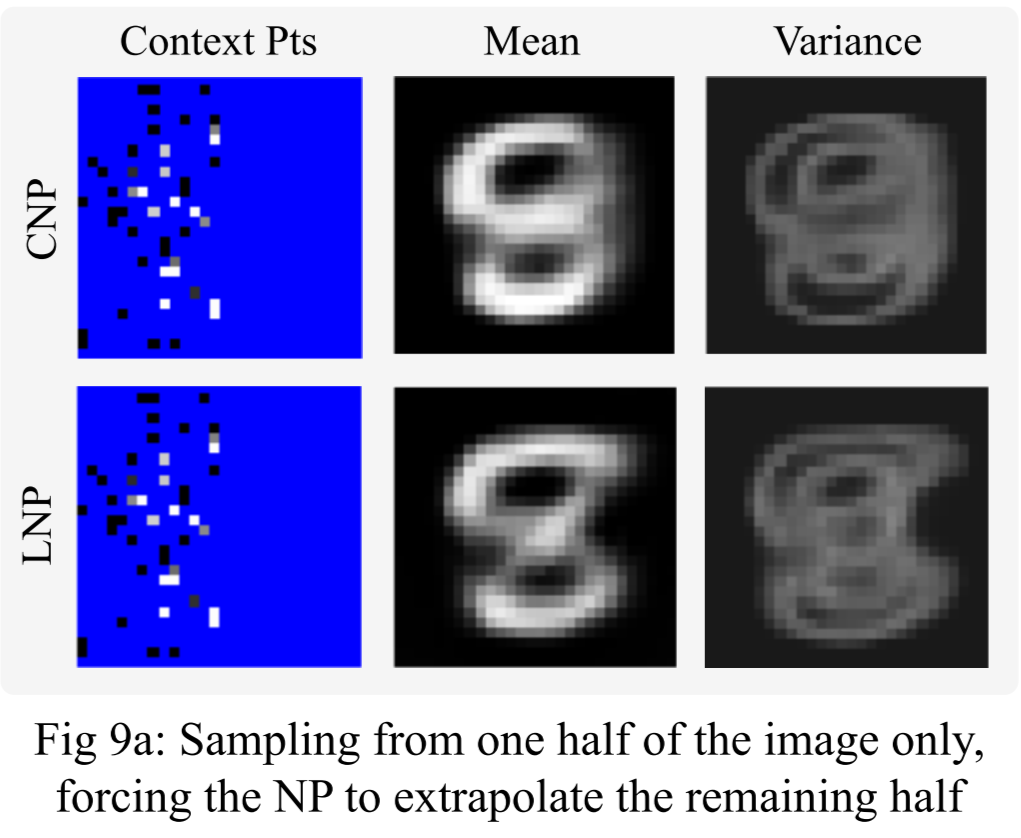

We investigated two architectures: the Latent Neural Process (LNP) and the Conditional Neural Process (CNP). Both are MLP encoder-decoder architectures, but the LNP places a stochastic Gaussian latent variable between the encoder and the decoder. This encourages a more sophisticated decoder that can produce sharp predictions from noisy inputs, and LNPs are commonly used to model weather data.
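The difference between the two forward passes can be sketched in a few lines. This is a minimal, untrained NumPy sketch rather than our TensorFlow code: the layer sizes, the mean aggregation over the context set, the softplus used to keep scales positive, and all function names (`init_mlp`, `mlp`, `predict`) are illustrative assumptions. The only change between the two models is whether the aggregated representation `r` is fed to the decoder directly (CNP) or parameterises a Gaussian from which a latent `z` is sampled (LNP).

```python
import numpy as np

rng = np.random.default_rng(0)


def init_mlp(sizes):
    """Random (untrained) weights for an MLP with the given layer sizes."""
    return [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp(params, h):
    """ReLU MLP whose final layer is linear."""
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:
            h = np.maximum(h, 0.0)
    return h


def softplus(x):
    """Numerically stable log(1 + exp(x)), used to keep scales positive."""
    return np.logaddexp(0.0, x)


R = 16  # size of the representation / latent (assumed)

encoder = init_mlp([2, 32, R])       # (x_i, y_i) -> per-point representation
latent_head = init_mlp([R, 2 * R])   # LNP only: r -> (mean, raw scale) of q(z)
decoder = init_mlp([R + 1, 32, 2])   # (r or z, x_target) -> (mean, raw scale)


def predict(x_ctx, y_ctx, x_tgt, latent=False):
    """Predictive mean and standard deviation at each target location."""
    pairs = np.stack([x_ctx, y_ctx], axis=-1)   # (n_ctx, 2)
    r = mlp(encoder, pairs).mean(axis=0)        # order-invariant mean over the context
    if latent:
        # LNP: sample z ~ N(mu_z, sigma_z^2) with the reparameterisation trick.
        mu_z, raw = np.split(mlp(latent_head, r), 2)
        r = mu_z + softplus(raw) * rng.standard_normal(R)
    # CNP: r is passed to the decoder deterministically.
    h = np.concatenate([np.tile(r, (len(x_tgt), 1)), x_tgt[:, None]], axis=-1)
    out = mlp(decoder, h)                       # (n_tgt, 2)
    mean = out[:, 0]
    std = 1e-3 + softplus(out[:, 1])            # small floor avoids zero variance
    return mean, std
```

Because the context is summarised by a mean over points, the prediction does not depend on the order of the context set, while calling `predict(..., latent=True)` twice draws two different function samples from the LNP.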

Both architectures predict a mean and a variance at every target location, so each prediction comes with an estimate of its own uncertainty.
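A per-target mean and variance define a Gaussian predictive distribution, which is what lets the uncertainty be used in practice. The NumPy sketch below (function names are hypothetical, not from our code) scores targets under that Gaussian and turns it into approximate 95% intervals. CNPs are typically trained by minimising this negative log-likelihood over target points, while LNPs optimise a variational bound that adds a KL term to it.

```python
import numpy as np


def gaussian_nll(y, mean, std):
    """Average negative log-likelihood of targets y under N(mean, std**2)."""
    var = std ** 2
    return np.mean(0.5 * np.log(2 * np.pi * var) + 0.5 * (y - mean) ** 2 / var)


def predictive_interval(mean, std, z=1.96):
    """Approximate 95% interval at each target under the Gaussian predictive."""
    return mean - z * std, mean + z * std


y = np.array([0.0, 1.0])
mean = np.array([0.0, 0.0])
print(gaussian_nll(y, mean, np.array([1.0, 1.0])))  # honest variance
print(gaussian_nll(y, mean, np.array([0.1, 0.1])))  # over-confident variance
```

With identical means, the over-confident prediction is penalised heavily for its error on the second target, which is how the loss pushes the model to report variances that reflect its actual errors.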